Conversational AI is a branch of artificial intelligence that enables machines to simulate human conversation.

To understand what conversational AI is, it helps to first look at Natural Language Processing and at how its evolution has made conversational AI possible.

Natural Language Processing

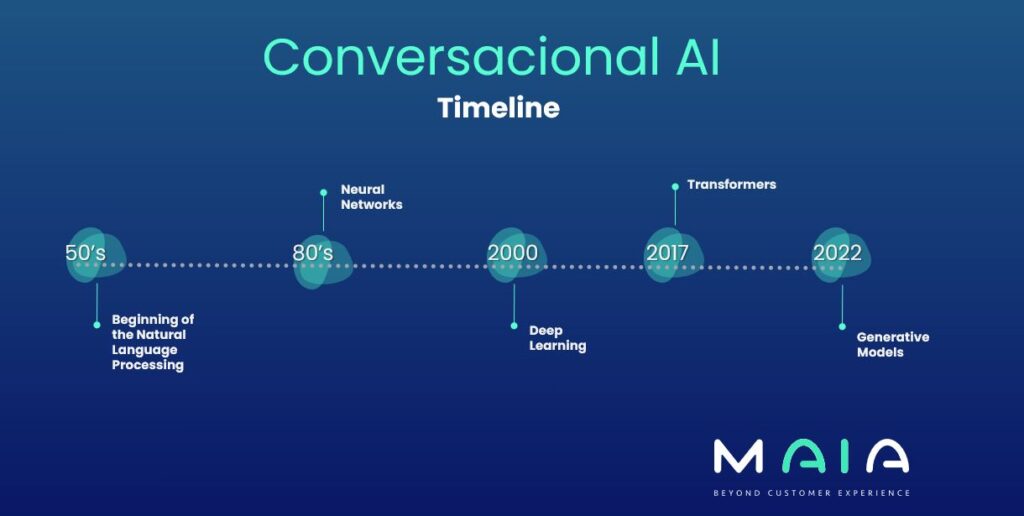

Natural language processing (NLP) seeks to teach machines to understand, interpret and generate human language. Its beginnings date back to the 1950s, when researchers began using rule-based approaches and formal grammars to analyze and generate text. However, these systems were very limited: they relied on predefined rules and could not adapt to the variations and ambiguities of natural language.
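To make that limitation concrete, here is a minimal sketch of a rule-based responder in Python. The rules, phrasings and the `respond` function are hypothetical illustrations, not a reconstruction of any historical system. Each rule is a hand-written regular expression paired with a reply. Any phrasing the rule author did not anticipate falls through to a fallback answer.

```python
import re

# Hypothetical hand-written rules: a regular expression paired with a reply template.
RULES = [
    (re.compile(r"^(?:hello|hi)\b", re.IGNORECASE), "Hello! How can I help you?"),
    (re.compile(r"^what is the weather in (\w+)\??$", re.IGNORECASE),
     "Looking up the weather in {0}."),
]
FALLBACK = "Sorry, I did not understand that."

def respond(utterance):
    """Return the reply of the first rule whose pattern matches, else a fallback."""
    text = utterance.strip()
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Captured groups (e.g. the city name) fill the template's placeholders.
            return template.format(*match.groups())
    return FALLBACK

print(respond("What is the weather in Madrid?"))
print(respond("Will it rain in Madrid tomorrow?"))  # same intent, different wording
```

The first question matches a rule exactly. The second asks essentially the same thing, yet it gets the fallback reply because no rule anticipates that wording. Covering every variation by hand quickly becomes unmanageable.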

To overcome these limitations, the field has advanced considerably over the following decades, and several key milestones stand out.

Neural Networks

In the mid-1980s, artificial neural networks began to be applied to NLP. Inspired by the structure and functioning of the human brain, these models can learn complex patterns and structures directly from data. As large amounts of digitized text and greater computing power became available, machine learning became an essential tool in the evolution of NLP.

Deep Learning

In the first decades of the 21st century, NLP underwent a significant transformation thanks to deep learning, that is, neural networks with many stacked layers. These models made systems better at understanding and generating natural language text, as well as more robust and accurate. As a result, they began to be applied to tasks such as machine translation, text summarization, text generation and document classification.

Transformers

The big change came in 2017 with the introduction of the Transformer architecture. Its most remarkable feature is the attention mechanism, which builds an attention matrix scoring how strongly each word relates to every other word in the sentence. This lets the model take the whole context into account and grasp the meaning of the text as a whole.
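A minimal sketch in plain Python shows how such an attention matrix can be computed. It is a simplification under stated assumptions. The two-dimensional word vectors are made up, and the queries, keys and values are simply the word vectors themselves. A real Transformer learns separate projection matrices for each and runs several attention heads in parallel. Each score is a dot product divided by the square root of the vector dimension. A softmax then turns each row of scores into weights that sum to 1.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability; the results sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections (Q = K = V = vectors)."""
    d = len(vectors[0])
    scale = math.sqrt(d)
    # Attention matrix: row i holds how strongly word i attends to every word j.
    weights = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
        weights.append(softmax(scores))
    # Each output is the attention-weighted average of all (value) vectors.
    outputs = [
        [sum(w * value[c] for w, value in zip(row, vectors)) for c in range(d)]
        for row in weights
    ]
    return weights, outputs

# Hypothetical toy embeddings for a three-word sentence.
tokens = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

weights, outputs = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

In this toy example, the vectors for "the" and "sat" share a component. The row for "the" therefore gives them equal weights, both larger than the weight on "cat". Each output vector is a weighted average over all words, which is how every position ends up carrying information from the whole sentence.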

Generative Models

By now you are probably wondering when we are going to talk about the famous ChatGPT. Generative language models such as ChatGPT and LLaMA were released in late 2022 and early 2023 respectively. They are built by stacking many Transformer layers, so they have billions of parameters and are trained on corpora containing hundreds of billions of words or more. These models mark a turning point in AI and are sparking intense debate about their impact, since in many text-generation tasks their output comes close to what a human would write.

Now that we have traced the evolution of natural language processing, it is easier to understand how we arrived at what we now call conversational AI. It relies on models that understand language and context, and that generate responses which fit the conversation and resemble those a human would give.

Would you like to know what conversational AI can do for you? We invite you to meet MAIA.